CodeNinja 7B Q4: How to Use the Prompt Template

Available in a 7B model size, CodeNinja is adaptable for local runtime environments. Deepseek Coder and CodeNinja are good 7B models for coding; Hermes Pro and Starling are good chat models.

The simplest way to engage with CodeNinja is via the quantized versions in LM Studio. In LM Studio, load the model CodeNinja 1.0 OpenChat 7B Q4_K_M and ensure you select the OpenChat preset, which incorporates the necessary prompt.

Getting the right prompt format is critical for better answers. You need to strictly follow prompt templates and keep your questions short. If there is a </s> (EOS) token anywhere in the text, it messes up the output. When results look wrong, the first question to ask is whether you are using the right prompt format. One user testing a 7B instruct model in Text Generation Web UI noticed that its prompt template is different from the normal Llama 2 one.

Quantized files are published by TheBloke. One repo contains GGUF format model files for beowolx's CodeNinja 1.0 OpenChat 7B (GGUF model commit a9a924b, made with llama.cpp commit 6744dbe, 5 months old). Another contains GPTQ model files for CodeNinja 1.0: GPTQ models are for GPU inference, come with multiple quantisation parameter options, and are currently supported on Linux by the known compatible clients and servers. For AWQ, only 128g GEMM models are currently released. These files were quantised using hardware kindly provided by Massed Compute.

On prompt templating more generally, one tutorial focuses on leveraging Python and the Jinja2 templating engine to create flexible, reusable prompt structures that can incorporate dynamic content. Example prompts that are easy to copy, adapt and use for yourself are available (external link, LinkedIn), along with a handy PDF version of the cheat sheet (external link, BP).

Several related snippets are cut off in the source: "These are the parameters and prompt I am using for llama.cpp: ..."; "Formulating a reply to the same prompt takes at least 1 minute: ..."; "Users are facing an issue with imported LLaVA: ..."; "We will need to develop model.yaml to easily define model capabilities (e.g. ..."; "ChatGPT can get very wordy sometimes, and ..."; "The tutorial demonstrates how to ...".
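To make the advice about strict prompt templates and stray </s> tokens concrete, here is a minimal sketch in plain Python. It assumes the OpenChat-style turn format (`GPT4 Correct User:` / `GPT4 Correct Assistant:` separated by `<|end_of_turn|>`), which is not spelled out in the text above and should be checked against the model card before use. The helper names `sanitize` and `build_openchat_prompt` are hypothetical, chosen only for illustration.

```python
# Assumed template strings: OpenChat-style roles and turn separator.
# Verify them against the model card; they are not given in this article.
USER_PREFIX = "GPT4 Correct User: "
ASSISTANT_PREFIX = "GPT4 Correct Assistant:"
END_OF_TURN = "<|end_of_turn|>"
EOS_TOKEN = "</s>"


def sanitize(text: str) -> str:
    """Drop any literal EOS marker and trim surrounding whitespace."""
    return text.replace(EOS_TOKEN, "").strip()


def build_openchat_prompt(question: str, history=None) -> str:
    """Build a single prompt string from optional (user, assistant) history
    plus the new question, leaving the assistant turn open for generation."""
    parts = []
    for user_text, assistant_text in (history or []):
        parts.append(f"{USER_PREFIX}{sanitize(user_text)}{END_OF_TURN}")
        parts.append(f"{ASSISTANT_PREFIX} {sanitize(assistant_text)}{END_OF_TURN}")
    parts.append(f"{USER_PREFIX}{sanitize(question)}{END_OF_TURN}")
    parts.append(ASSISTANT_PREFIX)
    return "".join(parts)


if __name__ == "__main__":
    # A short question, with an accidental EOS marker that gets stripped.
    print(build_openchat_prompt("Write a bubble sort in Python.</s>"))
```

If you use the OpenChat preset in LM Studio, the preset applies the template for you; a helper like this is only relevant when you send raw prompts yourself, for example through llama.cpp.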

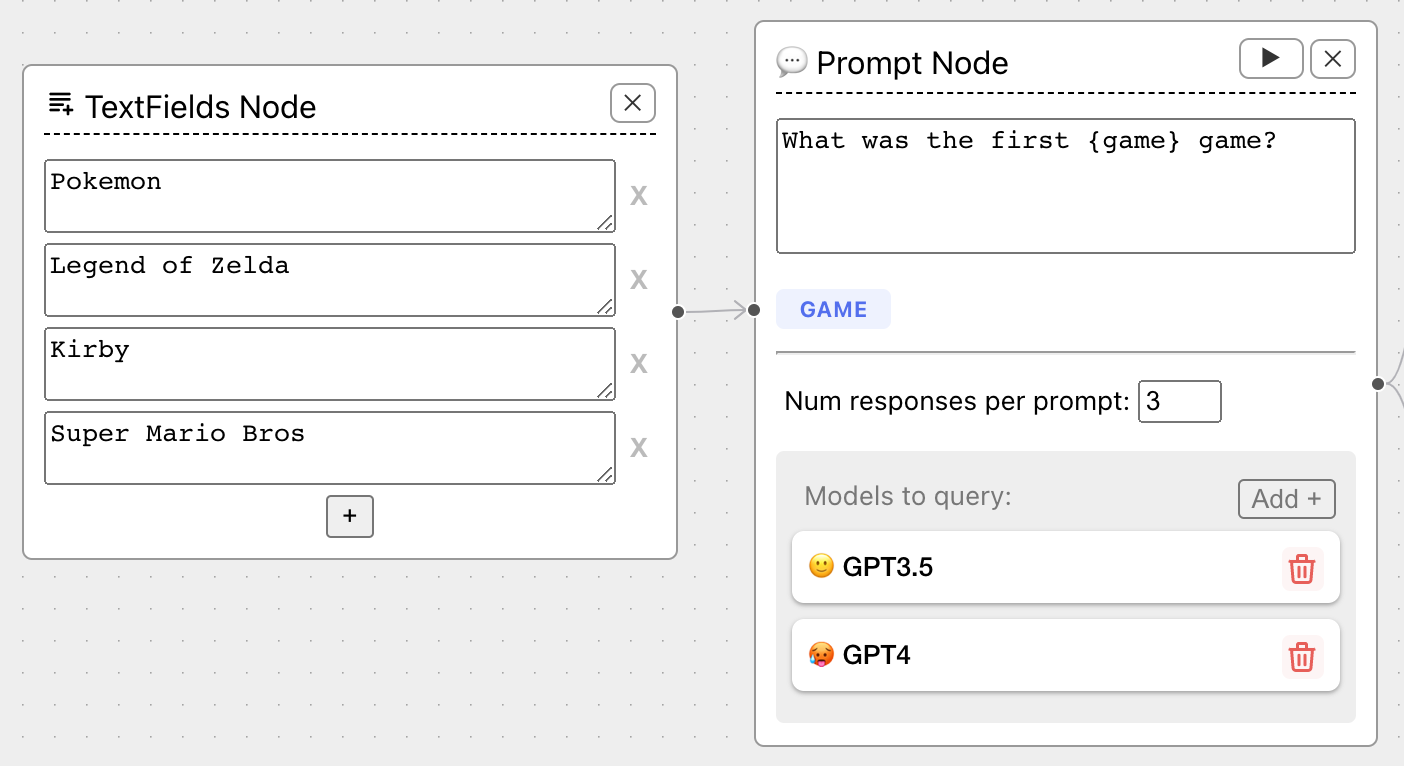

Prompt Templating Documentation

Custom Prompt Template Example from Docs can't instantiate abstract

Beowolx CodeNinja 1.0 OpenChat 7B: a Hugging Face Space by hinata97

RTX 4060 Ti 16GB deepseek coder 6.7b instruct Q4 K M using KoboldCPP 1.

fe2plus/CodeLlama7bInstructhf_PROMPT_TUNING_CAUSAL_LM at main

TheBloke/CodeNinja-1.0-OpenChat-7B-GPTQ · Hugging Face

TheBloke/CodeNinja-1.0-OpenChat-7B-AWQ · Hugging Face
